FASTER TECHNOLOGIES FOR FIRST RESPONDERS

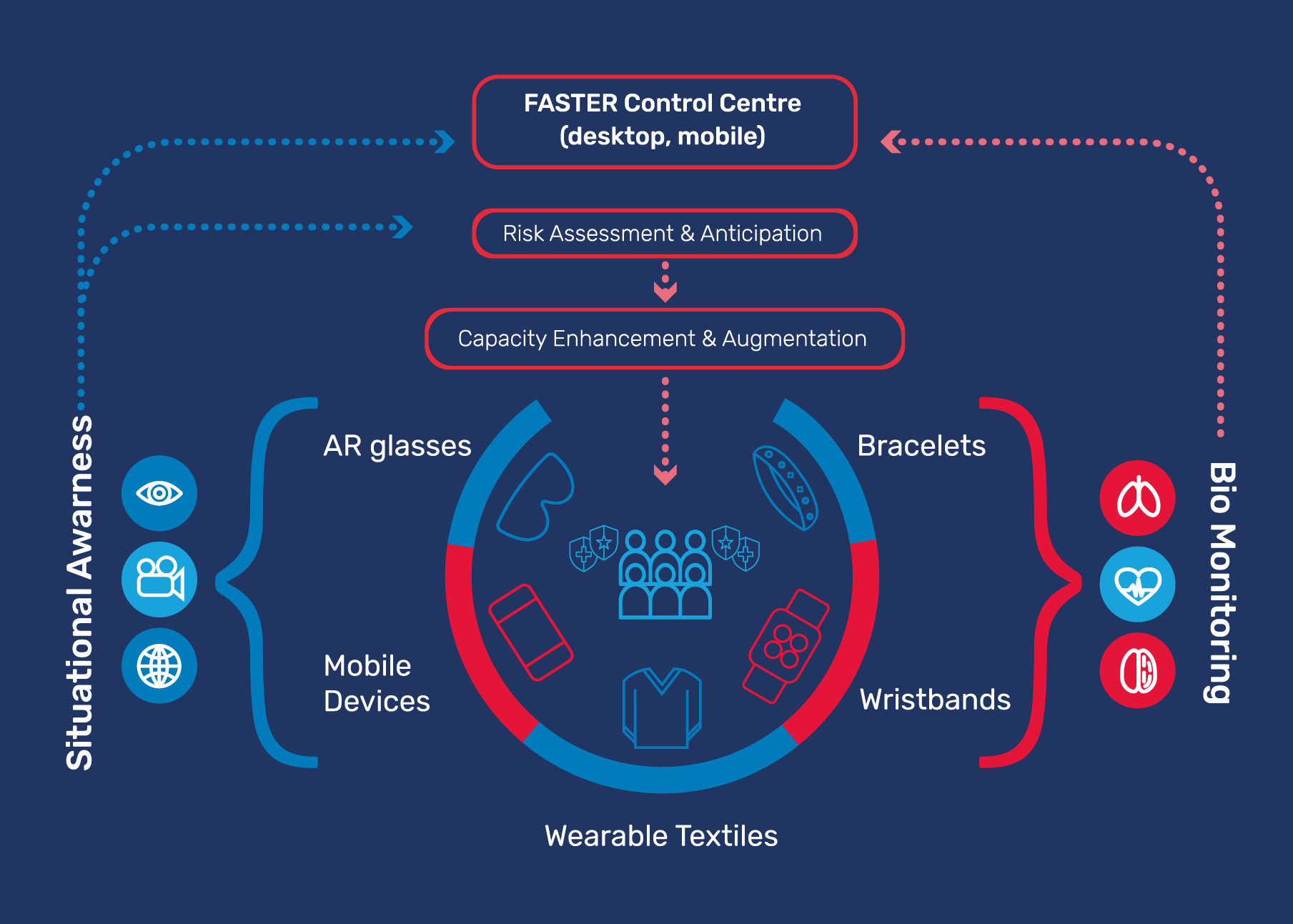

FASTER will develop a set of tools to enhance the operational capacity of first responders while increasing their safety in the field. It will introduce Augmented Reality technologies for improved situational awareness and early risk identification, and Mobile and Wearable technologies for better mission management and information delivery to first responders. Body- and gesture-based User Interfaces will be employed to enable new capabilities while reducing equipment clutter, offering unprecedented ergonomics. Moreover, FASTER will provide a platform of Autonomous Vehicles, namely drones and robots, to collect valuable information from the disaster scene before operations begin, extend situational awareness and offer physical response capabilities to first responders. FASTER will gather multi-modal data from the field through an IoT network, together with Social Media content, and extract meaningful information from them, either locally or in the cloud, to provide an enhanced Common Operational Picture to the responder teams in a decentralised way through Portable Control Centres. It will additionally use blockchain technology, with smart contracts, to keep track of needs and capabilities for maximum efficiency. The whole system will be supported by tools for Resilient Communications, featuring opportunistic relay services, emergency communication devices and 5G-enabled communication capabilities.

Mobile Augmented Reality (AR) for Operational Support:

This module aims to provide more efficient situational awareness and decision-making support to practitioners in critical conditions that demand the full attention and focus of the first responders involved. FASTER will provide Augmented Reality (AR) technology to Civil Protection field units, delivering in real time information gathered from the other FASTER components (e.g. alerts, team status and location, sensor values), filtering that information and presenting targeted content to the AR user. AR will be supplied through both mobile phones and AR glasses (e.g. HoloLens) and will let first responders access previously unreachable information in context, by superimposing the data onto the real world.
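To illustrate the kind of filtering described above, here is a minimal Python sketch that keeps only alerts within a given radius of the AR user and orders them by severity, then by distance. The `Alert` record, the severity scale, the 500 m default radius and the function names are hypothetical illustrations, not part of the FASTER design; distances use the standard haversine formula.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class Alert:
    """Hypothetical alert record forwarded from another FASTER component."""
    message: str
    lat: float
    lon: float
    severity: int  # hypothetical scale: 1 (info) .. 3 (critical)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def select_for_ar(alerts, user_lat, user_lon, radius_m=500.0, min_severity=1):
    """Keep alerts near the user; most severe first, then nearest first."""
    scored = []
    for alert in alerts:
        d = haversine_m(user_lat, user_lon, alert.lat, alert.lon)
        if d <= radius_m and alert.severity >= min_severity:
            scored.append((-alert.severity, d, alert))
    # Sort only on (negated severity, distance) so Alert objects are never compared.
    scored.sort(key=lambda item: (item[0], item[1]))
    return [alert for _, _, alert in scored]
```

A real deployment would probably also filter by role or team; distance is simply the easiest relevance criterion to show.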

Extended vision technologies using commercial lightweight UAVs:

Many factors may prevent first responders from reaching and visually inspecting impassable or dangerous areas of a disaster site, such as ruins, obstacles, and harmful or unknown environmental conditions. FASTER aims to extend first responders’ visual perception by deploying lightweight, camera-equipped UAVs to explore otherwise inaccessible or potentially dangerous areas. These small form-factor UAVs will form part of the first responders’ gear and will be deployed on demand, when and where necessary. Drones are typically used to extend the user’s line of sight by streaming their video to the pilot, who flies the UAV in first-person view. This requires a screen and therefore the pilot’s focused attention. Instead, FASTER will offer first responders an exocentric, X-ray-like visualization of occluded areas from their own physical viewpoint, by combining UAVs with AR see-through devices and advanced 3D scene analysis and modelling algorithms. This will widen their field of view and make obstacles between the UAV and the responder appear partially transparent. Video streamed from the UAV’s camera will be rendered through an image-based rendering pipeline to augment the user’s vision and enable better navigation, remote discovery and situational awareness in narrow and complex environments.
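The core geometric step behind an exocentric see-through view is projecting a 3D point, reconstructed from the UAV's imagery, into the responder's own viewpoint. The sketch below assumes a standard pinhole camera model with a known world-to-headset pose (`R`, `t`) and intrinsics (`fx`, `fy`, `cx`, `cy`), using the common convention that the camera looks along +z. These names and the convention are assumptions; FASTER's actual image-based rendering pipeline is considerably more involved.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def world_to_camera(p_world: Vec3, R: Sequence[Sequence[float]], t: Vec3) -> Vec3:
    """Apply the rigid world-to-camera transform p_cam = R @ p_world + t."""
    return tuple(sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3))


def project(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection to pixel coordinates; None if the point is behind the viewer."""
    x, y, z = p_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# Example: identity pose, a point 10 m ahead of the headset, slightly right and below centre.
IDENTITY = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
pixel = project(world_to_camera((1.0, 0.5, 10.0), IDENTITY, (0.0, 0.0, 0.0)), 800.0, 800.0, 640.0, 360.0)
```

With these values the point lands at pixel (720.0, 400.0). Content at that pixel can then be drawn even though a wall hides the real object, which is the essence of the X-ray-like overlay.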

Mission management and progress monitoring:

During emergencies there is a strong need for effective coordination between the control centre and in-field units. FASTER will design and implement a novel mobile application for first responders that can support inter-agency communication, manage mission tasking and progress monitoring, and allow real-time reporting of incidents and of geo-located multimedia content to improve situational awareness. The mobile application will rely on a cloud-based back-end, which provides data services, and a front-end, which provides the user interface for decision makers in the control room. It will interact with other components to give responders the latest available data, including the location of K9 units. The mobile application developed within the I-REACT project will serve as an initial asset, to which advanced features such as voice control and gesture and activity recognition will be added.
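Mission tasking and progress monitoring can be modelled as tasks moving through a small lifecycle. The following Python sketch is a hypothetical illustration only: the status names, the allowed transitions and the progress metric are assumptions, not the data model of the FASTER or I-REACT applications.

```python
from enum import Enum


class TaskStatus(Enum):
    ASSIGNED = "assigned"
    ACCEPTED = "accepted"
    IN_PROGRESS = "in_progress"
    COMPLETED = "completed"
    ABORTED = "aborted"


# Hypothetical lifecycle: which transitions are allowed is an assumption.
ALLOWED = {
    TaskStatus.ASSIGNED: {TaskStatus.ACCEPTED, TaskStatus.ABORTED},
    TaskStatus.ACCEPTED: {TaskStatus.IN_PROGRESS, TaskStatus.ABORTED},
    TaskStatus.IN_PROGRESS: {TaskStatus.COMPLETED, TaskStatus.ABORTED},
    TaskStatus.COMPLETED: set(),
    TaskStatus.ABORTED: set(),
}


class Task:
    def __init__(self, task_id, description):
        self.task_id = task_id
        self.description = description
        self.status = TaskStatus.ASSIGNED
        self.history = [TaskStatus.ASSIGNED]  # kept for progress monitoring

    def transition(self, new_status):
        """Move to a new status, rejecting transitions the lifecycle forbids."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} not allowed")
        self.status = new_status
        self.history.append(new_status)


def mission_progress(tasks):
    """Fraction of non-aborted tasks that are completed (0.0 if none remain)."""
    active = [t for t in tasks if t.status is not TaskStatus.ABORTED]
    if not active:
        return 0.0
    return sum(t.status is TaskStatus.COMPLETED for t in active) / len(active)
```

Rejecting invalid transitions on the device keeps the control room's view of a mission consistent even when reports arrive late or out of order.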

Wearable sensors and smart textiles:

FASTER will design and develop a prototype that integrates electronic devices (i.e., sensors) into wearable textiles, embedded in the undergarment of the first responder’s uniform, to collect biometric data (e.g., body temperature, heart rate). Additional sensors (e.g., temperature, humidity) will be mounted on the first responder’s uniform. All these data will be relayed through a smartphone that provides edge computing capabilities: because calculations take place at the edge of the network, the information, including accurate positioning, can be enhanced in near real time. In addition, the FASTER solution will have to comply with the existing security standards for first responders’ uniforms and protect the electronic parts of the wearables under the extreme conditions that first responders may face.
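As an illustration of the edge processing the smartphone could perform, the sketch below smooths incoming biometric readings over a short window and raises alerts locally, so that only compact alerts need to leave the device. The class name, window size and thresholds are hypothetical placeholders, not validated physiological limits or FASTER parameters.

```python
from collections import deque
from statistics import mean


class VitalsMonitor:
    """Smooths biometric readings on the phone and flags alerts locally.

    Thresholds are illustrative placeholders, not medical guidance.
    """

    def __init__(self, window=5, max_hr_bpm=180.0, max_body_temp_c=39.0):
        # Fixed-length buffers: the oldest reading drops out automatically.
        self.hr = deque(maxlen=window)
        self.temp = deque(maxlen=window)
        self.max_hr = max_hr_bpm
        self.max_temp = max_body_temp_c

    def update(self, hr_bpm, body_temp_c):
        """Add one reading and return the alerts triggered by the moving averages."""
        self.hr.append(hr_bpm)
        self.temp.append(body_temp_c)
        alerts = []
        if mean(self.hr) > self.max_hr:
            alerts.append("heart_rate_high")
        if mean(self.temp) > self.max_temp:
            alerts.append("body_temperature_high")
        return alerts
```

Averaging over a window rather than reacting to single samples trades a little latency for robustness against motion artefacts in the sensor signal.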

Hand gesture recognition:

FASTER will provide a framework for wearable devices that captures and identifies arm and body movements using Artificial Intelligence. It will offer non-visual, non-audible communication capabilities, translating movements or critical readings from paired wearable devices into coded messages that are delivered to cooperating agents in the field as Morse-code vibrations on their wearable devices. A Deep Learning model adapted to mobile devices will be implemented to classify motion signals into predefined gestures in real time. Because communication infrastructure has often collapsed during operations, messages will be transmitted using IoT communication protocols (e.g., Bluetooth Low Energy, BLE).
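The Morse-code vibration output can be sketched as converting a short text message into a sequence of motor on/off pulses. This Python sketch uses the standard Morse timing ratios (dot = 1 unit, dash = 3 units, gap within a letter = 1 unit, between letters = 3 units, between words = 7 units). The 100 ms unit and the function name are assumptions, and the actual FASTER message vocabulary is not specified here.

```python
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
    "0": "-----", "1": ".----", "2": "..---", "3": "...--", "4": "....-",
    "5": ".....", "6": "-....", "7": "--...", "8": "---..", "9": "----.",
}


def to_vibration_pattern(text, unit_ms=100):
    """Return (motor_on, duration_ms) pulses for text, using standard Morse timing.

    Characters outside the table raise KeyError.
    """
    pattern = []
    for w_idx, word in enumerate(text.upper().split()):
        if w_idx > 0:
            pattern.append((False, 7 * unit_ms))  # silence between words
        for c_idx, ch in enumerate(word):
            if c_idx > 0:
                pattern.append((False, 3 * unit_ms))  # silence between letters
            for s_idx, symbol in enumerate(MORSE[ch]):
                if s_idx > 0:
                    pattern.append((False, unit_ms))  # silence inside a letter
                # Dot vibrates for one unit, dash for three.
                pattern.append((True, unit_ms if symbol == "." else 3 * unit_ms))
    return pattern
```

For example, `to_vibration_pattern("SOS")` lasts 27 units (2.7 s at 100 ms per unit), which gives a feel for how short the coded vocabulary would need to be for use in the field.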

K9 Behaviour Recognition:

In the context of FASTER, a Deep Learning model and a novel wearable device for K9s will be developed. The device will feature sensors (i.e., a 3-axis accelerometer and gyroscope) that capture and transmit the K9’s motion signals and situational status in real time, while the model will extract valuable information about K9 behaviour and translate it into specific messages that can be transmitted wirelessly to first responders through IoT communication protocols. In parallel, FASTER will study the definition of a communication protocol that translates the K9’s behaviour (e.g., movement or barking) into a message addressed to the person in need, informing them about the K9’s role and giving useful tips to follow in order to facilitate the first responders’ work.
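Messages sent over BLE benefit from being compact. The sketch below packs a classified K9 behaviour into a fixed 8-byte payload with Python's `struct` module, which fits within the 20-byte payload of a BLE notification at the default ATT MTU. The behaviour classes, the human-readable messages and the field layout are all hypothetical; the real classes would come from the trained model and from the protocol FASTER will define.

```python
import struct
from enum import IntEnum


class K9Behaviour(IntEnum):
    # Hypothetical label set; the real classes would come from the trained model.
    RESTING = 0
    SEARCHING = 1
    VICTIM_INDICATION = 2  # e.g. sustained barking at one spot
    DISTRESS = 3


# Hypothetical human-readable messages shown on the responder's app.
MESSAGES = {
    K9Behaviour.RESTING: "K9 resting",
    K9Behaviour.SEARCHING: "K9 searching",
    K9Behaviour.VICTIM_INDICATION: "K9 indicates a possible victim",
    K9Behaviour.DISTRESS: "K9 may be in distress",
}

# Little-endian, no padding: behaviour (uint8), confidence % (uint8),
# dog id (uint16), Unix time in seconds (uint32) = 8 bytes.
FMT = "<BBHI"


def encode(behaviour, confidence, dog_id, timestamp_s):
    """Pack a classifier output (confidence in [0, 1]) into a compact payload."""
    return struct.pack(FMT, int(behaviour), round(confidence * 100), dog_id, timestamp_s)


def decode(payload):
    """Unpack a payload back into (behaviour, confidence, dog_id, timestamp_s)."""
    b, conf_pct, dog_id, ts = struct.unpack(FMT, payload)
    return K9Behaviour(b), conf_pct / 100, dog_id, ts
```

A fixed binary layout keeps decoding trivial on constrained devices; the cost is that adding fields later requires a versioned format.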

Gesture-based UxV navigation:

Within FASTER, first responders will deploy different UxVs at disaster sites in different scenarios. FASTER will provide customized hands-free solutions for UxV navigation and control, offering new capabilities and unprecedented ergonomics to first responders while eliminating the need for separate controller devices and reducing equipment clutter. Research will focus on touchless, easy-to-operate and intuitive exocentric interfaces based on body, head and hand gestures. Unlike egocentric approaches, these interfaces will not require a direct feed of the drone’s view: first responders will steer the UxVs through gaze and head movement recognition and navigate the space using hand gestures. Multifunctional devices already present in the first responders’ gear, such as monitoring smartwatches equipped with accelerometers and the AR headset, will be used for pose and body-part tracking, while AI algorithms will recognize a set of UxV-specific gestures defined in collaboration with End-Users. Combined with the augmented vision technologies offered by FASTER, this will give first responders a holistic approach to precise UxV navigation, even in narrow and out-of-sight areas.
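A hands-free controller ultimately has to turn recognized gestures and head orientation into motion commands for the UxV. The Python sketch below shows one simple way to do this under stated assumptions: the gesture names, speeds, confidence threshold and proportional yaw gain are hypothetical, and unknown or low-confidence gestures fall back to a hover command as a fail-safe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VelocityCommand:
    forward_mps: float = 0.0
    lateral_mps: float = 0.0
    vertical_mps: float = 0.0
    yaw_rate_dps: float = 0.0


# Hypothetical gesture vocabulary; the real set is to be defined with End-Users.
GESTURES = {
    "palm_forward": VelocityCommand(forward_mps=1.0),
    "palm_back": VelocityCommand(forward_mps=-1.0),
    "point_left": VelocityCommand(lateral_mps=-1.0),
    "point_right": VelocityCommand(lateral_mps=1.0),
    "raise_hand": VelocityCommand(vertical_mps=0.5),
    "lower_hand": VelocityCommand(vertical_mps=-0.5),
}

HOVER = VelocityCommand()  # all zeros: hold position


def to_command(gesture, confidence, head_yaw_offset_deg=0.0,
               min_confidence=0.8, yaw_gain=0.5, max_yaw_rate_dps=30.0):
    """Map a classified hand gesture plus head orientation to a velocity command.

    head_yaw_offset_deg is the angle between where the responder is looking and
    the UxV heading; a clamped proportional yaw rate turns the UxV towards it.
    """
    if confidence < min_confidence or gesture not in GESTURES:
        return HOVER  # fail-safe for uncertain or unknown input
    base = GESTURES[gesture]
    yaw_rate = max(-max_yaw_rate_dps, min(max_yaw_rate_dps, yaw_gain * head_yaw_offset_deg))
    return VelocityCommand(base.forward_mps, base.lateral_mps, base.vertical_mps, yaw_rate)
```

Falling back to hovering rather than repeating the last command is a common safety choice when a classifier is uncertain.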

Robotic Platform:

The FASTER robotic vehicle will include a robotic platform integrating different sensors (optical and thermal cameras, environmental sensors, and nuclear, biological, chemical, radiological and explosives detectors) and, if the use cases require it, a robotic arm with several end-effector options (grippers or tools). The AGV will provide the following interfaces to the drone: a tether, autonomous take-off from and landing on the AGV, and battery charging. The system will also include wireless broadband mesh communications with robust waveforms, supporting the exchange of large amounts of data (including video) and enabling cooperation between multiple robots, including UAVs; an advanced operation control console with an enhanced user interface and visualisation capabilities, including visual analytics and advanced controls for an immersive user experience; and localisation and 3D modelling algorithms that generate advanced 3D maps of the environment.